Starting Monday, YouTube creators will be required to label when realistic-looking videos were made using artificial intelligence, part of a broader effort by the company to be transparent about content that could otherwise confuse or mislead users.

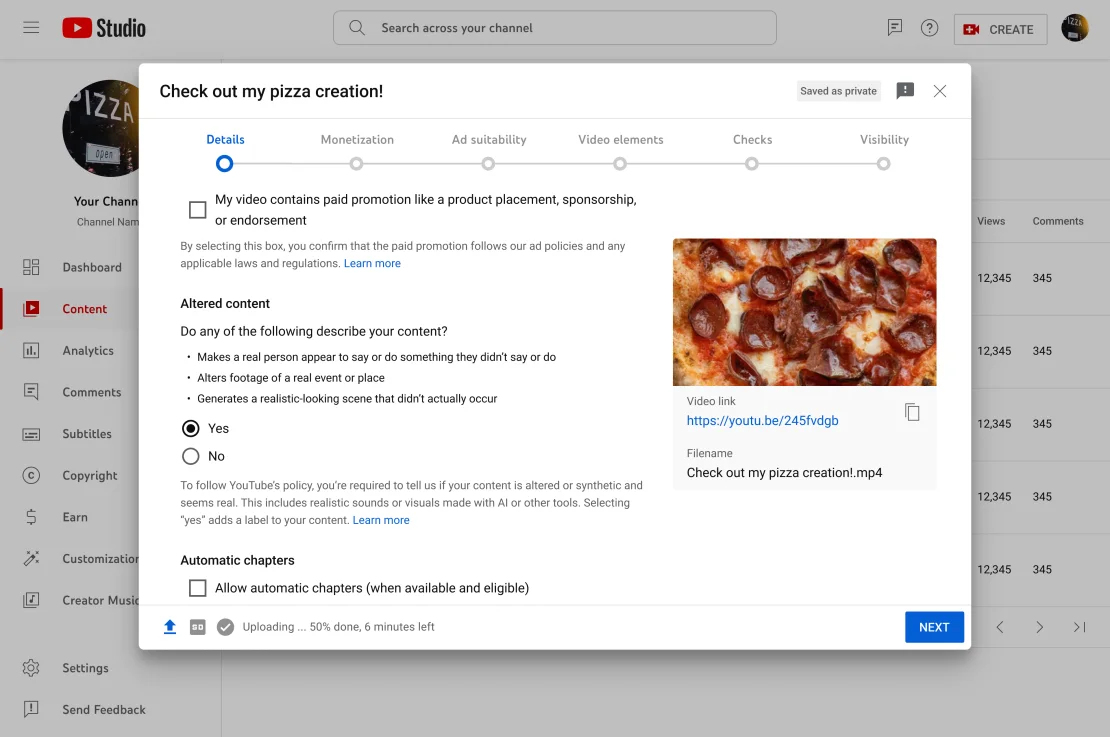

When a user uploads a video to the site, they will see a checklist asking if their content makes a real person say or do something they didn’t do, alters footage of a real place or event, or depicts a realistic-looking scene that didn’t actually occur.

The disclosure is meant to keep users from being confused by synthetic content amid a proliferation of new, consumer-facing generative AI tools that make it quick and easy to create compelling text, images, video and audio that can be hard to distinguish from the real thing. Online safety experts have raised alarms that the spread of AI-generated content could mislead users across the internet, especially ahead of elections in the United States and elsewhere in 2024.

YouTube creators will be required to identify when their videos contain AI-generated or otherwise manipulated content that appears realistic, so that YouTube can attach a label for viewers, and could face consequences if they repeatedly fail to add the disclosure. The platform announced in the fall that the update was coming, as part of a larger rollout of new AI policies.

When a YouTube creator reports that their video contains AI-generated content, YouTube will add a label in the description noting that it contains “altered or synthetic content” and that the “sound or visuals were significantly edited or digitally generated.” For videos on “sensitive” topics such as politics, the label will be added more prominently on the video screen.

Content created with YouTube’s own generative AI tools, which rolled out in September, will also be labeled clearly, the company said last year. YouTube will require creators to label only realistic AI-generated content that could confuse viewers into thinking it’s real.

Creators won’t be required to disclose when the synthetic or AI-generated content is clearly unrealistic or “inconsequential,” such as AI-generated animations or lighting or color adjustments. The platform says it also won’t require creators “to disclose if generative AI was used for productivity, like generating scripts, content ideas, or automatic captions.”

Creators who consistently fail to use the new label on synthetic content that should be disclosed may face penalties such as content removal or suspension from YouTube’s Partner Program, under which creators can monetize their content.